Managing IIS Logs with GZipStream

Depending on how many sites your Windows web server is hosting, maintaining IIS logs can be a challenge. IIS logs provide valuable insight into the traffic your sites are experiencing, as well as detailed SEO metrics and performance data. A typical web server will have just enough free disk space for future growth but will ultimately be limited by the capacity of the drives in the server. If left unmonitored, IIS logs can quickly fill any remaining disk space. There are a few third-party tools that are good at compressing log files when they are under one parent folder, but when the log files are in different locations, such as on a WebsitePanel server I support, an alternative solution is needed. In this walkthrough I will demonstrate how I solved this challenge using a C# console application and GZipStream.

What about Windows Folder Compression?

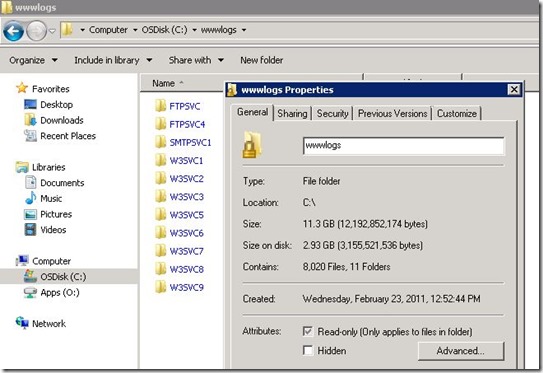

Enabling Windows folder compression should always be the first step to try when freeing up space used by IIS logs. I always redirect the site logs to a folder at the root level of the server so it’s easy to find them and monitor their growth. In the example shown here I saved 8.3 GB of disk space without even having to zip any files. However, Windows folder compression was not going to work for my WebsitePanel server.

Finding WebsitePanel IIS Logs

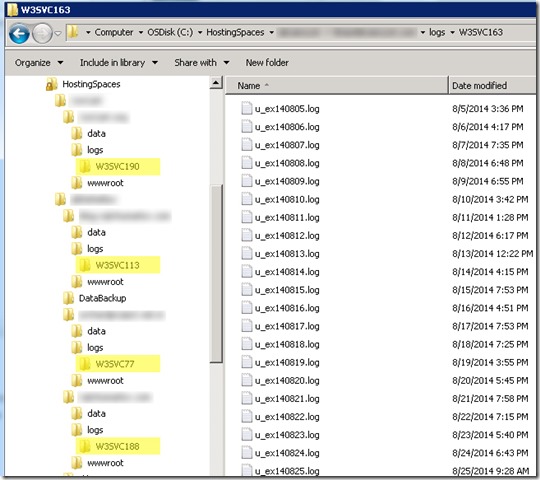

WebsitePanel is a wonderful Windows control panel for multi-tenant web hosting, and the best part is that it’s free. If you haven’t discovered this incredible application yet, check it out. Once installed, every aspect of site creation and maintenance is completely automated. As shown in the picture, customer sites are usually deployed with a directory structure like c:\HostingSpaces\customername\sitename. The IIS logs are always stored further down the tree in …\sitename\logs\W3SVCXX. As one can imagine, on a web server with hundreds of sites it would be quite a challenge to track down and manually apply Windows compression to all the W3SVC folders. This would be further complicated as new sites are added to the server.

Using Directory.EnumerateFiles and GZipStream

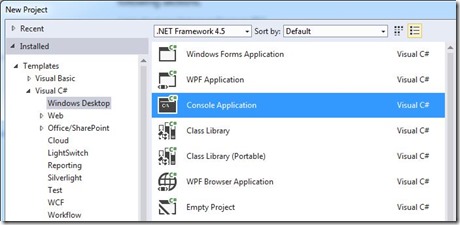

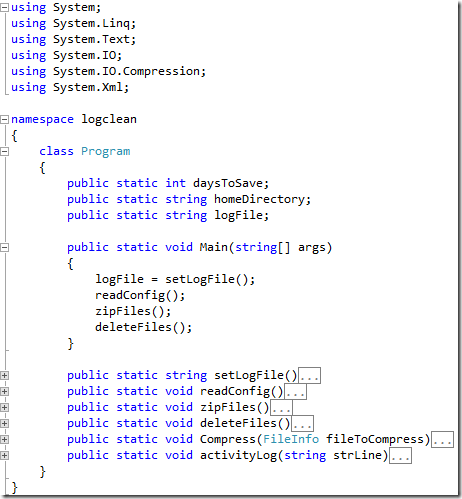

I knew I was going to do some coding to solve my challenge but I also wanted to keep the solution easy to use. In years past I would have considered VBScript folder recursion and executing the PKZip command line tool but I wanted something more contemporary. So I used Visual Studio 2013 and created a Console Application.

The GZipStream class, which was introduced in .NET Framework 2.0, would handle the necessary file compression. Since I would only be compressing individual files, I didn’t have to deal with a separate library such as DotNetZip.

    // Compresses a single file to <name>.gz alongside the original.
    // Hidden files and files that are already .gz archives are skipped.
    public static void Compress(FileInfo fileToCompress)
    {
        bool isHidden = (fileToCompress.Attributes & FileAttributes.Hidden) == FileAttributes.Hidden;
        if (isHidden || fileToCompress.Extension == ".gz")
        {
            return;
        }

        string gzPath = fileToCompress.FullName + ".gz";
        using (FileStream originalFileStream = fileToCompress.OpenRead())
        using (FileStream compressedFileStream = File.Create(gzPath))
        using (GZipStream compressionStream = new GZipStream(compressedFileStream, CompressionMode.Compress))
        {
            originalFileStream.CopyTo(compressionStream);
        }

        // Read the size only after the streams are closed, so the gzip data is fully flushed.
        Console.WriteLine("Compressed {0} from {1} to {2} bytes.",
            fileToCompress.Name, fileToCompress.Length, new FileInfo(gzPath).Length);
    }
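Archived logs occasionally need to be read again, and GZipStream works in the other direction as well. The following is a minimal sketch of a compress and decompress round trip; the GzipRoundTrip class and its method names are hypothetical and not part of my original program.

```csharp
using System;
using System.IO;
using System.IO.Compression;

// Hypothetical helper, shown only to illustrate both GZipStream modes.
public static class GzipRoundTrip
{
    // Compresses sourcePath to sourcePath + ".gz" and returns the archive path.
    public static string Compress(string sourcePath)
    {
        string gzPath = sourcePath + ".gz";
        using (FileStream source = File.OpenRead(sourcePath))
        using (FileStream target = File.Create(gzPath))
        using (GZipStream gzip = new GZipStream(target, CompressionMode.Compress))
        {
            source.CopyTo(gzip);
        }
        return gzPath;
    }

    // Expands a .gz archive into destinationPath.
    public static void Decompress(string gzPath, string destinationPath)
    {
        using (FileStream source = File.OpenRead(gzPath))
        using (GZipStream gzip = new GZipStream(source, CompressionMode.Decompress))
        using (FileStream target = File.Create(destinationPath))
        {
            gzip.CopyTo(target);
        }
    }
}
```

Decompressing to a temporary file and comparing it with the original is one way to verify an archive before the original log is deleted.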

The Directory.EnumerateFiles method, introduced in .NET Framework 4, would find all the IIS log files in the WebsitePanel hosting spaces directory tree. The "*.log" search pattern together with SearchOption.AllDirectories searches every subfolder for .log files, and a LINQ Where filter limits the results to paths containing “W3SVC”. In the snippet below I loop through the search results, compress any log file older than 1 day, and delete the original file. The last step is to write the name of the file that was just zipped to an audit log.

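    // Compresses every IIS log older than one day, then removes the original.
    public static void zipFiles()
    {
        try
        {
            // ToArray() snapshots the results before any file is deleted.
            foreach (string file in Directory.EnumerateFiles(homeDirectory, "*.log", SearchOption.AllDirectories)
                                             .Where(d => d.Contains("W3SVC")).ToArray())
            {
                FileInfo fi = new FileInfo(file);
                if (fi.LastWriteTime < DateTime.Now.AddDays(-1))
                {
                    Compress(fi);
                    // Compress skips hidden files, so delete only when the archive exists.
                    if (File.Exists(file + ".gz"))
                    {
                        fi.Delete();
                        activityLog("Zipping: " + file);
                    }
                }
            }
        }
        catch (UnauthorizedAccessException UAEx)
        {
            activityLog(UAEx.Message);
        }
        catch (PathTooLongException PathEx)
        {
            activityLog(PathEx.Message);
        }
    }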

I only needed 30 days’ worth of log files to be left on the server. In the snippet below I delete any zipped log files older than that and then write the activity to the audit log.

    // Deletes zipped logs older than the configured number of days.
    public static void deleteFiles()
    {
        try
        {
            foreach (string file in Directory.EnumerateFiles(homeDirectory, "*.gz", SearchOption.AllDirectories)
                                             .Where(d => d.Contains("W3SVC")).ToArray())
            {
                FileInfo fi = new FileInfo(file);
                if (fi.LastWriteTime < DateTime.Now.AddDays(-daysToSave))
                {
                    fi.Delete();
                    activityLog("Deleting: " + file);
                }
            }
        }
        catch (UnauthorizedAccessException UAEx)
        {
            activityLog(UAEx.Message);
        }
        catch (PathTooLongException PathEx)
        {
            activityLog(PathEx.Message);
        }
    }
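The activityLog helper called in these snippets is not listed in this walkthrough. As a rough sketch of what such a helper could look like, the version below appends a timestamped line to a text file. The AuditLog class, the LogPath field, and the file name are assumptions made for illustration, not the original implementation.

```csharp
using System;
using System.IO;

// Hypothetical audit log helper; the real program's log location is not shown.
public static class AuditLog
{
    // Assumed location: a text file next to the executable.
    public static string LogPath =
        Path.Combine(AppDomain.CurrentDomain.BaseDirectory, "logClean-activity.txt");

    // Appends one timestamped line to the audit log and echoes the message to the console.
    public static void activityLog(string message)
    {
        string line = DateTime.Now.ToString("yyyy-MM-dd HH:mm:ss") + " " + message + Environment.NewLine;
        File.AppendAllText(LogPath, line);
        Console.WriteLine(message);
    }
}
```

Keeping the audit file outside the hosting spaces tree, and giving it an extension other than .log, avoids it being picked up by the same cleanup pass.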

Using an XML Config File

To help keep my application flexible I created a simple XML file to store the path to the WebsitePanel hosting spaces folder and the number of days of logs I wanted to keep on the server.

    <?xml version="1.0" encoding="utf-8"?>
    <MyConfig>
      <HomeDirectory>D:\HostingSpaces</HomeDirectory>
      <DaysToSave>30</DaysToSave>
    </MyConfig>

Loading these values into my program is done by parsing the XML data using XmlDocument.

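    // Reads the hosting spaces path and the retention period from logCleanConfig.xml.
    public static void readConfig()
    {
        // A relative path resolves against the current working directory.
        string path = System.IO.Path.GetFullPath(@"logCleanConfig.xml");
        XmlDocument xmlDoc = new XmlDocument();
        xmlDoc.Load(path);

        // InnerText returns the decoded text value of each element.
        XmlNodeList HomeDirectory = xmlDoc.GetElementsByTagName("HomeDirectory");
        homeDirectory = HomeDirectory[0].InnerText;
        XmlNodeList DaysToSave = xmlDoc.GetElementsByTagName("DaysToSave");
        daysToSave = Convert.ToInt32(DaysToSave[0].InnerText);
    }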
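Because a relative path is resolved against the current working directory, and a scheduled task does not necessarily start in the program's folder, it can help to look for the config file next to the executable and to validate the values before any files are deleted. Here is a minimal sketch of that idea; the LogCleanConfig class and its members are hypothetical names, and only the XML element names come from the file above.

```csharp
using System;
using System.IO;
using System.Xml;

// Hypothetical settings holder for the two values in the config file.
public class LogCleanConfig
{
    public string HomeDirectory;
    public int DaysToSave;

    // Loads and validates the settings from an XML file shaped like the one above.
    public static LogCleanConfig Load(string path)
    {
        XmlDocument xmlDoc = new XmlDocument();
        xmlDoc.Load(path);

        XmlNode home = xmlDoc.SelectSingleNode("/MyConfig/HomeDirectory");
        XmlNode days = xmlDoc.SelectSingleNode("/MyConfig/DaysToSave");
        if (home == null || days == null)
        {
            throw new InvalidDataException("HomeDirectory and DaysToSave are required.");
        }

        // Reject zero or negative values, which would make every archive eligible for deletion.
        int daysToSave;
        if (!int.TryParse(days.InnerText.Trim(), out daysToSave) || daysToSave < 1)
        {
            throw new InvalidDataException("DaysToSave must be a positive whole number.");
        }

        return new LogCleanConfig { HomeDirectory = home.InnerText.Trim(), DaysToSave = daysToSave };
    }

    // Builds the config path from the executable's folder instead of the working directory.
    public static string DefaultPath()
    {
        return Path.Combine(AppDomain.CurrentDomain.BaseDirectory, "logCleanConfig.xml");
    }
}
```

A call such as LogCleanConfig.Load(LogCleanConfig.DefaultPath()) at startup would then fail fast on a missing or malformed file.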

Running the Program

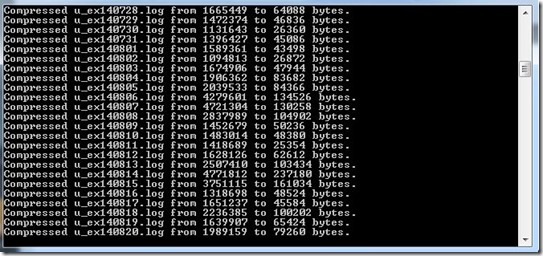

Running the program from a command line, I can see the progress as files are compressed. Afterwards I set up a scheduled task so it will run every day to compress new IIS log files and purge the ones older than 30 days.

A few days later I came across several sites on the server using CMS products with their own application logs. These log files were not being cleaned up by the site owners and were taking up free space on the server, so I decided to archive them as well. Since they were being stored within each site in a subfolder called “Logs”, adding a second condition to the Where filter applied to the Directory.EnumerateFiles results would catch them. With a quick rebuild of the program, it would now compress the CMS log files as well as the IIS log files.

    foreach (string file in Directory.EnumerateFiles(homeDirectory, "*.log", SearchOption.AllDirectories)
                                     .Where(d => d.Contains("W3SVC") || d.Contains("Logs")).ToArray())
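One thing to keep in mind is that Contains performs a case-sensitive substring match on the whole path, so it would also match a folder such as MyLogsArchive and would miss a folder named logs. A stricter variant is sketched below, assuming that only the immediate parent folder name should decide whether a file qualifies. The LogFolderFilter class and its methods are hypothetical, and this is not the filter my program uses.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical stricter filter that tests the parent folder name only.
public static class LogFolderFilter
{
    // True when the file's immediate parent folder starts with "W3SVC"
    // or is named "Logs", ignoring case.
    public static bool IsInLogFolder(string filePath)
    {
        string parent = Path.GetFileName(Path.GetDirectoryName(filePath)) ?? string.Empty;
        return parent.StartsWith("W3SVC", StringComparison.OrdinalIgnoreCase)
            || parent.Equals("Logs", StringComparison.OrdinalIgnoreCase);
    }

    // Lazily enumerates .log files under homeDirectory that sit in a qualifying folder.
    public static IEnumerable<string> FindLogFiles(string homeDirectory)
    {
        return Directory.EnumerateFiles(homeDirectory, "*.log", SearchOption.AllDirectories)
                        .Where(IsInLogFolder);
    }
}
```

The trade-off is that log files nested deeper inside a Logs folder are no longer included, so the substring match may still be the better fit for some CMS products.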

In Summary

IIS logs contain a wealth of data about web site traffic and performance. Left unchecked, the logs will accumulate over time and take up disk space on the server. Using Windows folder compression is a simple solution to reclaim valuable disk space. If your log files are in different locations, then leveraging the power of .NET with Directory.EnumerateFiles and GZipStream will do the trick. Thanks for reading.