Blocking SQL Injection with IIS Request Filtering

SQL Injection became a favorite hacking technique in 2007. Despite being widely documented for so many years, it continues to evolve and be used. Because SQL Injection is such a well-known attack vector, I am always surprised when, as a sysadmin, I come across a site that has been compromised by it. In most instances the site was compromised because user data entered on web forms was not properly validated. Classic ASP sites using inline SQL queries with hardcoded query string parameters are especially vulnerable. Fortunately, regardless of a site’s potential programming weaknesses, it can still be protected. In this walkthrough I will cover how to protect your site from SQL Injection using IIS Request Filtering.

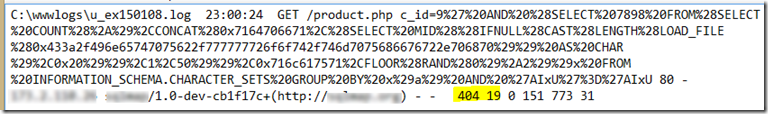

Identifying SQL Injection Requests

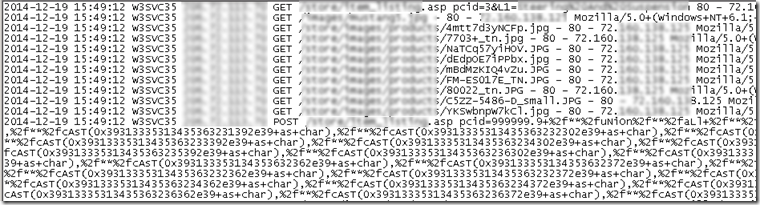

Most people find out about SQL Injection the hard way, after their web site has been defaced or their database has been compromised. If you are not sure whether your site has been attacked, you need look no further than your web site’s traffic logs. Upon visual inspection of the IIS site logs you will see blocks of legitimate requests interspersed with the malicious requests. Checking the HTTP status code at the end of each request will indicate whether there is an issue. If the status code was a 404 or a 500, the malicious request most likely didn’t work. However, if the request had an HTTP status code of 200, then you should be concerned.

Using Log Parser to Find SQL Injection Requests

Looking through web logs for malicious requests can be tedious and time-consuming. Microsoft’s wonderful log analysis tool Log Parser makes it easier to identify pages on your site that are being targeted by SQL Injection attacks. Running the query below will create a report of all the page requests in the log, along with their query string parameters.

logparser.exe "SELECT EXTRACT_FILENAME(cs-uri-stem) AS PageRequest, cs-uri-query, COUNT(*) AS TotalHits FROM C:\wwwlogs\W3SVC35\u_ex141219.log TO results.txt GROUP BY cs-uri-stem, cs-uri-query ORDER BY TotalHits DESC"

Using Findstr to find SQL Injection Requests

Using Log Parser to identify malicious requests is helpful; however, if you need to look at multiple sites’ logs the task becomes more challenging. For these situations I like to use Findstr. Findstr is a powerful Windows tool that uses regular expressions to search files for any string value. One powerful feature is that you can store your search strings in separate files and even exclude certain strings from being searched. In the example below, I use the /g parameter to have Findstr load a file named sqlinject.txt with my predefined strings and then search all the web logs in the W3SVC1 folder. The output is redirected to a file called results.txt.

findstr /s /i /p /g:sqlinject.txt C:\wwwlogs\W3SVC1\*.log >c:\results.txt

Using this syntax it is easy to extend the capability of Findstr by creating a simple batch file that covers all the web log folders on your server. Once set up, you will be able to identify any SQL Injection requests against your server within minutes.

Configuring IIS Request Filtering

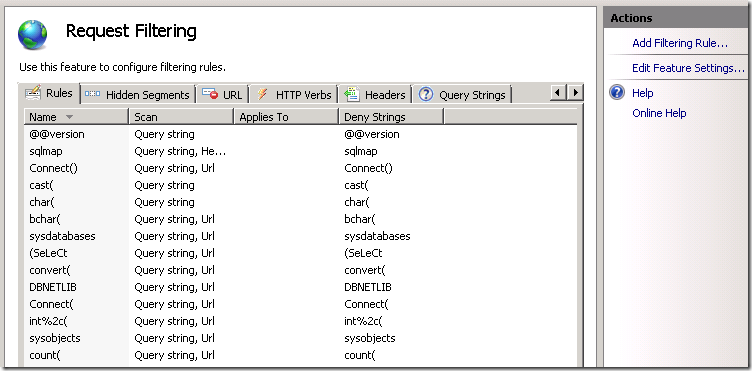

The Request Filtering module was introduced in IIS 7 as a replacement for the very capable UrlScan. Using Log Parser and Findstr you will be able to identify plenty of malicious requests attacking the web sites on your server, and Request Filtering is how you block them. A Request Filtering rule can block requests based on file extensions, URL, HTTP verbs, headers, or query strings. Additionally, you can block requests that exceed a maximum query string size or URL length.

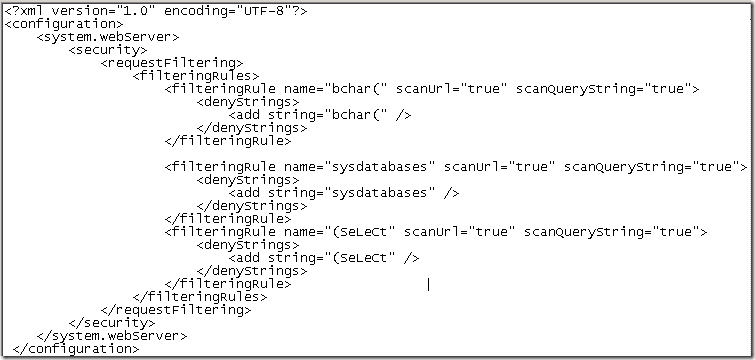

Like any other IIS module, you can maintain the settings outside of IIS Manager by editing the web.config. The values are stored in the <requestFiltering> section, which sits under <security> within <system.webServer>.

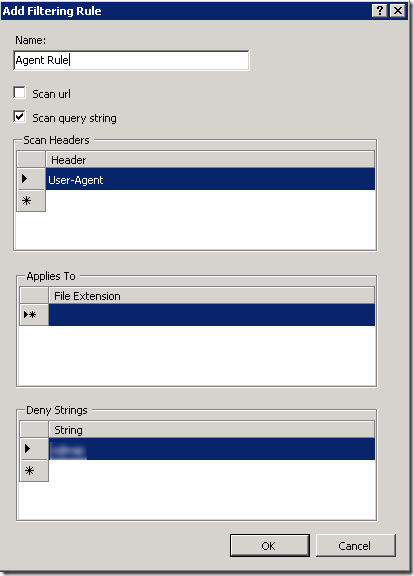
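As a rough sketch of where these settings live, the fragment below shows a <requestFiltering> section nested under <system.webServer> and <security>. The length limits shown are the IIS defaults and the denied verb is only an illustration; neither is taken from the site discussed in this walkthrough, so adjust them to your own needs.

```xml
<configuration>
  <system.webServer>
    <security>
      <requestFiltering>
        <!-- Maximum URL and query string lengths in bytes (IIS defaults shown) -->
        <requestLimits maxUrl="4096" maxQueryString="2048" />
        <!-- Illustrative only: deny a single HTTP verb and allow the rest -->
        <verbs allowUnlisted="true">
          <add verb="TRACE" allowed="false" />
        </verbs>
      </requestFiltering>
    </security>
  </system.webServer>
</configuration>
```

Requests that exceed maxUrl or maxQueryString are rejected before they reach your application code.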

Filtering Rules

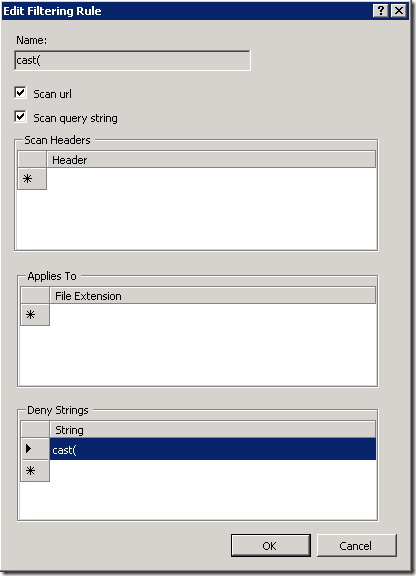

I typically create one rule for each Deny String that I want to block. You can add multiple strings to each rule; however, I find it easier to maintain when using only one string per rule. This way, if a rule is inadvertently blocking legitimate requests, you can quickly disable it while leaving the other ones operational. Either the request URL or the query string can be scanned for Deny Strings. However, enabling URL scanning requires a bit of forethought, because if the Deny String matches any part of the name of a page on your site, requests to that page will be blocked. For example, if you want to block requests containing the SQL command “update” but there happens to be a page called update.aspx on your site, any request to update.aspx will be blocked.

If I had to pick only one Deny String for filtering it would be cast(. Nearly every SQL Injection request I’ve seen uses cast(, and no legitimate page name or query string parameter should contain it.
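A minimal sketch of such a rule in web.config might look like the following. The rule name BlockCast is hypothetical, and the choice to scan only the query string (not the URL) is an assumption that follows the caution above about page names.

```xml
<configuration>
  <system.webServer>
    <security>
      <requestFiltering>
        <filteringRules>
          <!-- Hypothetical rule name; one Deny String per rule for easy maintenance -->
          <filteringRule name="BlockCast" scanUrl="false" scanQueryString="true">
            <denyStrings>
              <!-- Requests whose query string contains this text are denied -->
              <add string="cast(" />
            </denyStrings>
          </filteringRule>
        </filteringRules>
      </requestFiltering>
    </security>
  </system.webServer>
</configuration>
```

Keeping each Deny String in its own <filteringRule> element means a single rule can be removed or commented out without touching the others.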

404 Status Codes

Any request blocked by Request Filtering will return a 404 error status with a specific substatus to identify the reason it was denied. A few common ones are listed below.

| HTTP Substatus | Description |
| --- | --- |
| 404.5 | URL Sequence Denied |
| 404.14 | URL Too Long |
| 404.15 | Query String Too Long |
| 404.18 | Query String Sequence Denied |
| 404.19 | Denied by Filtering Rule |

With Request Filtering enabled, it is easy to keep an eye on blocked requests. The Log Parser query below will create a report of all the requests with HTTP status 404 and a substatus greater than zero.

logparser.exe "SELECT EXTRACT_FILENAME(cs-uri-stem) AS FILENAME, cs-uri-query, COUNT(*) AS TotalHits FROM C:\wwwlogs\W3SVC35\u_ex141219.log TO results.txt WHERE (sc-status = 404 AND sc-substatus > 0) GROUP BY cs-uri-stem, cs-uri-query ORDER BY TotalHits DESC"

Blocking User Agents

While investigating a recent SQL Injection attack I noticed in the IIS logs that the site had been compromised by an automated tool. It was interesting to see how the first malicious request was very basic and each subsequent one became more elaborate, with increasingly complex SQL queries. What I found even more curious was that each request used the same User-Agent, which in fact identified the name of the tool and where to download it.

The tool’s web site clearly states that its creators released it with the intention of helping system administrators discover vulnerabilities. Unfortunately, it’s far too easy for someone to use it with malicious intent. The good news is that blocking requests based on the User-Agent is quite easy. You just need to create a new rule, specify User-Agent as the header to scan, and then add the name of the agent to the Deny Strings. As you can see by the 404.19 status in the picture above, the automated tool was successfully blocked the next time around, after the rule was added.
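For illustration, a User-Agent rule expressed in web.config could look roughly like this. The rule name and the agent string ExampleScanner are placeholders, since the actual tool is not named here; substitute the User-Agent value you find in your own logs.

```xml
<configuration>
  <system.webServer>
    <security>
      <requestFiltering>
        <filteringRules>
          <!-- Hypothetical rule name; scans only the listed header, not the URL or query string -->
          <filteringRule name="BlockScannerAgent" scanUrl="false" scanQueryString="false">
            <scanHeaders>
              <add requestHeader="User-Agent" />
            </scanHeaders>
            <denyStrings>
              <!-- Placeholder value: replace with the agent name seen in your logs -->
              <add string="ExampleScanner" />
            </denyStrings>
          </filteringRule>
        </filteringRules>
      </requestFiltering>
    </security>
  </system.webServer>
</configuration>
```

Keep in mind that a User-Agent header is trivial to change, so a rule like this is best treated as one layer alongside the query string rules rather than a complete defense.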

In Summary

SQL Injection is a popular attack vector for web sites but by leveraging IIS Request Filtering the malicious requests can be easily blocked. Using Log Parser and Findstr you can quickly check your site’s logs to identify suspicious activity. Thanks for reading.