DPM: How to plan for protecting VMs in private cloud deployments

Windows 2012 helps customers maximize the ROI of their hardware investments and manage them efficiently. Windows 2012 supports various Hyper-V deployments, including Hyper-V over CSV, Hyper-V over Remote SMB, and standalone Hyper-V. This provides an efficient and flexible deployment capability that meets various customer needs.

A Windows 2012 Hyper-V cluster can have as many as 64 nodes and host up to 8000 VMs. Along with the compute enhancements, Hyper-V can keep its storage on a CSV cluster, on locally attached storage, or on Remote SMB (clustered or standalone).

Various parameters need to be considered when architecting a private cloud deployment and its protection. This document discusses the following aspects:

- DPM – Hyper-V support matrix

- Storage and colocation

- Network speeds

- Backup impact on CSV

- Parallel backups

DPM – Hyper-V support matrix

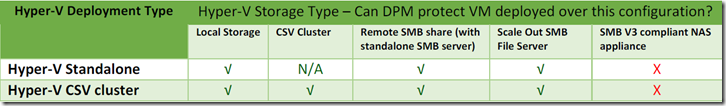

DPM has enhanced its data protection capabilities to protect various Hyper-V deployments. The following table lists the Hyper-V deployment combinations and DPM’s capability to protect them.

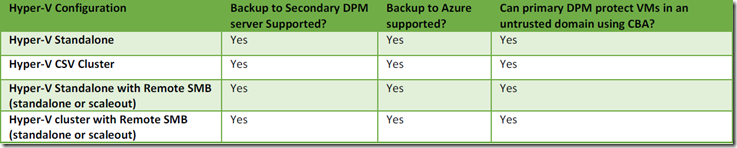

Note that Certificate Based Authentication (CBA), which is used to protect a PS when the PS and DPM reside in untrusted domains, can be used only with a primary DPM server. The secondary DPM server and the primary DPM server need to be in trusted domains.

In SC 2012 SP1, DPM extended its “express full” capabilities to all of the supported deployments above. This means that DPM tracks changes on the VM as they happen and, at backup time, reads only these changed blocks and sends them to the DPM server.

DPM can protect these large cluster deployments by leveraging its scale out feature discussed here, and can reduce backup storage consumption by excluding the pagefile from the backup content as discussed here. A DPM server can now protect 800 VMs with a 100GB average VM size, once a day backup and 3% net churn. While this is 4 times the scale that prior DPM versions could handle, it is not sufficient to protect large clusters. With the scale out feature, a cluster can be protected by multiple DPM servers. Even with scale out, several scale limits need to be considered when configuring backup. All of them are discussed in detail here.

Diff Area Limit of 40TB per DPM Server

DPM simplifies backup and recovery by creating two volumes, a replica volume and a shadow copy volume, for each data source or group of data sources, depending on whether the user leverages the colocation feature. The replica volume always carries a replica of the last snapshot that was backed up. The shadow copy volume carries the differentials between snapshots. This is done by leveraging Windows VolSnap capabilities. With VolSnap, one can retrieve any Point In Time (PIT) without applying prior PITs. DPM has been tested with 40TB of total shadow copy volume space per DPM server. Note that DPM’s diff area is the product of the data source size, the churn per backup, the number of backups per day, and the number of retention days, summed over all data sources. For example, if a DPM server protects 800 VMs with a 100GB average VM size, 3% net churn per day, once a day backup, and a 14 day retention period, the total diff area required for this DPM server is 33.6TB (VM Count * Average VM Size * Net Churn Per Day * Retention Period).
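The diff area arithmetic above can be reproduced with a few lines of Python. This is only a sizing sketch: the function and constant names are made up for illustration, and it assumes decimal units (1TB = 1000GB), which is what the 33.6TB figure implies.

```python
DIFF_AREA_LIMIT_TB = 40  # tested shadow copy volume space per DPM server (from the text)


def diff_area_tb(vm_count, avg_vm_size_gb, net_churn_per_day, retention_days):
    """VM Count * Average VM Size * Net Churn Per Day * Retention Period, in TB."""
    total_gb = vm_count * avg_vm_size_gb * net_churn_per_day * retention_days
    return total_gb / 1000  # assumption: 1TB = 1000GB


# The example from the text: 800 VMs, 100GB each, 3% churn, 14 days retention.
required = diff_area_tb(800, 100, 0.03, 14)
print(f"diff area: {required:.1f}TB, within tested limit: {required <= DIFF_AREA_LIMIT_TB}")
```

Changing the retention period or churn in this sketch quickly shows how close a given configuration sits to the 40TB tested limit.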

Replica Volume Limit of 80TB per DPM Server

As discussed above, DPM maintains a replica volume that carries a replica of the last snapshot that was backed up. DPM has been tested with 80TB of total replica volume space, meaning all data sources combined can be 80TB in total size. In the above example of 800 VMs with a 100GB average VM size, the total replica volume size would be 80TB.
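As a rough planning aid, the two tested storage limits can be combined to estimate how many DPM servers a cluster needs when using scale out. The sketch below is an assumption-laden estimate, not a DPM tool: the function name is hypothetical, units are decimal, and it considers only the 80TB replica and 40TB diff area limits, ignoring the volume count, agent throughput, and job parallelism limits discussed elsewhere in this document.

```python
import math

REPLICA_LIMIT_GB = 80_000    # 80TB tested replica volume space per DPM server
DIFF_AREA_LIMIT_GB = 40_000  # 40TB tested shadow copy space per DPM server


def dpm_servers_needed(vm_count, avg_vm_size_gb, net_churn_per_day, retention_days):
    """Lower bound on DPM servers, based on the two storage limits only."""
    replica_gb = vm_count * avg_vm_size_gb
    diff_gb = replica_gb * net_churn_per_day * retention_days
    by_replica = math.ceil(replica_gb / REPLICA_LIMIT_GB)
    by_diff = math.ceil(diff_gb / DIFF_AREA_LIMIT_GB)
    return max(by_replica, by_diff, 1)


# A fully loaded 8000 VM cluster with the same per-VM profile as the example.
print(dpm_servers_needed(8000, 100, 0.03, 14))
```

With the profile from the text, the replica limit is the binding one; with longer retention or higher churn, the diff area limit takes over.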

Windows LDM limits and DPM data source colocation

Windows can have as many as 1000 volumes per server. As mentioned above, each data source needs two volumes. Considering the LDM limit of 1000 volumes, DPM introduced colocation, where multiple data sources can share one replica and shadow copy volume set. DPM can be configured to add multiple VMs to one protection group and opt in for colocation, meaning that DPM will try to place these VMs in one replica and shadow copy volume set. To achieve this, each replica volume is allocated 350GB by default, and as many VMs as fit into this replica volume are placed in it. So, even when colocation is enabled, VMs might still be located in different volumes. For example, if two VMs are protected, one of 300GB and another of 100GB, each VM will have its own replica and shadow copy volume set, because together they exceed 350GB. The 350GB default is chosen to avoid over- or under-provisioning storage for replica volumes. This value can be changed by updating the registry entry shown below.

Key name: HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft Data Protection Manager\Collocation\HyperV

Value name: CollocatedReplicaSize. The value should be a multiple of 1GB (1073741824 bytes).
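To get a feel for how the replica size affects colocation, the sketch below converts a size in GB to the byte value expected by CollocatedReplicaSize and packs VMs into replica volumes. The packing is a simple first-fit illustration and is an assumption: the text does not describe DPM's actual placement algorithm, and both function names are hypothetical. A VM larger than the replica size is assumed to get a volume of its own.

```python
BYTES_PER_GB = 1073741824       # 1GB as defined in the text
DEFAULT_REPLICA_SIZE_GB = 350   # default colocated replica volume size


def collocated_replica_size_bytes(size_gb):
    """Byte value for CollocatedReplicaSize; always a multiple of 1GB."""
    if not isinstance(size_gb, int) or size_gb <= 0:
        raise ValueError("size must be a positive whole number of GB")
    return size_gb * BYTES_PER_GB


def colocate(vm_sizes_gb, replica_size_gb=DEFAULT_REPLICA_SIZE_GB):
    """First-fit packing of VMs into replica volumes (illustrative only)."""
    volumes = []  # each entry lists the VM sizes sharing one replica volume
    for size in vm_sizes_gb:
        for volume in volumes:
            if sum(volume) + size <= replica_size_gb:
                volume.append(size)
                break
        else:
            volumes.append([size])  # nothing fits: start a new volume set
    return volumes


print(colocate([300, 100]))        # the example from the text
print(colocate([300, 100], 400))   # same VMs with a larger replica size
print(collocated_replica_size_bytes(400))
```

Each entry in the result stands for one replica and shadow copy volume set, so the list length times two approximates the volumes counted against the 1000 volume limit.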

DPM Agent Transfer Capabilities

The DPM server interacts with the Production Server (PS), a Hyper-V node in this case, through a DPM agent installed on the PS. Here, the DPM agent is installed on the Hyper-V machines to protect the VMs. The DPM agent is responsible for copying all the backup content to the corresponding DPM server. The DPM agent has been tested to send data at 1Gbps. So, when configuring a cluster and protecting its VMs across multiple DPM servers, do not plan for more than 1Gbps of transfer from any one agent, summed across all DPM servers.
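The 1Gbps figure translates directly into a minimum backup window per node. The following sketch estimates it under simplifying assumptions: decimal units (1GB = 8 gigabits), a link that sustains the full rate, and no protocol overhead. The function name and the 50 VM node are hypothetical.

```python
AGENT_LIMIT_GBPS = 1.0  # tested DPM agent transfer speed (from the text)


def transfer_hours(data_gb, link_gbps=AGENT_LIMIT_GBPS):
    """Hours needed to send data_gb at link_gbps, ignoring overhead."""
    gigabits = data_gb * 8
    seconds = gigabits / link_gbps
    return seconds / 3600


# Hypothetical node hosting 50 VMs of 100GB each with 3% daily churn.
daily_churn_gb = 50 * 100 * 0.03
print(f"{daily_churn_gb:.0f}GB takes about {transfer_hours(daily_churn_gb):.2f} hours")
```

An initial replica of the same node (5000GB) would take far longer than a daily express full backup, which is worth factoring into the rollout schedule.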

Remote SMB deployment and its requirements

In all Hyper-V configurations except Hyper-V over Remote SMB, the PS and its storage are on a single box, so the DPM agent on the PS can read the data from the storage subsystem and transfer it to the DPM server. In the case of Hyper-V over Remote SMB, DPM agents need to be installed on both the Hyper-V server(s) and the Remote SMB file server(s). The DPM agent on the Hyper-V server needs to fetch data by interacting with the DPM agent on the Remote SMB server. This means that if 1GB of data needs to be backed up from the PS (Hyper-V node), the PS moves 2GB over the network: 1GB to read from Remote SMB and 1GB to send to the DPM server. The DPM agent’s data transfer limit of 1Gbps should be counted only against the data sent to the DPM server and should exclude the network consumption to fetch data from Remote SMB.
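The accounting described above can be written down as a small helper, which makes it explicit which part of the traffic counts against the agent's 1Gbps limit. The function and key names are hypothetical and serve only to illustrate the rule.

```python
def node_network_traffic_gb(backup_gb, remote_smb):
    """Split the network traffic a Hyper-V node generates for one backup."""
    read_gb = backup_gb if remote_smb else 0  # fetched from the Remote SMB server
    sent_gb = backup_gb                       # always sent on to the DPM server
    return {
        "read_from_smb_gb": read_gb,
        "sent_to_dpm_gb": sent_gb,            # only this counts against 1Gbps
        "total_gb": read_gb + sent_gb,
    }


print(node_network_traffic_gb(1, remote_smb=True))
print(node_network_traffic_gb(1, remote_smb=False))
```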

Backup Impact on CSV

The DPM server triggers a backup job on the PS by interacting with the DPM agent on the PS. The DPM agent triggers a snapshot on the host, which in turn triggers an app consistent snapshot inside the VM (via the Hyper-V VSS writer), and the snapshot is then created. The DPM express full driver (installed at DPM agent installation time) tracks changes on the machine where the storage is present. This means that in the case of CSV, the DPM express full driver tracks changes on all nodes of the Hyper-V cluster. If the storage comes from a Remote SMB scale out cluster, the DPM express full driver on the Remote SMB scale out nodes tracks the changes.

Whenever a snapshot is taken of a VM, the snapshot is triggered on the whole CSV volume. A snapshot means that the data content at that point in time is preserved for the lifetime of the snapshot. DPM keeps the snapshot on the volume on the PS until the backup data transfer is complete, and then removes it. To preserve the content, any subsequent write to the snapshotted volume causes VolSnap to read the old content, write it to a new location, and then write the new content to the target location. For example, if block 10 is being written on a volume with a live snapshot, VolSnap will read the current block 10, write it to a new location, and write the new content to block 10. This means that as long as the snapshot is active, each write leads to one read and two writes. This kind of operation is called Copy On Write (COW). Even though the snapshot is taken for one VM, the actual snapshot happens on the whole volume. So, all VMs residing on that CSV incur the IO penalty due to COW. It is therefore advisable to have as few CSVs as possible to reduce the impact of one VM's backup on other VMs. Also, because a VM snapshot includes snapshots across all CSVs that hold this VM's VHDs, the fewer CSVs a VM uses, the better the backup performance.
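The block 10 example can be traced with a toy copy-on-write model. This is a teaching sketch, not VolSnap code: the class and method names are hypothetical, and it assumes a block is copied to the diff area only the first time it is overwritten while the snapshot is live, which is how copy-on-write is commonly implemented. The "one read and two writes" cost in the text corresponds to that first overwrite.

```python
class SnapshottedVolume:
    """Toy volume that counts the IOs caused by copy-on-write."""

    def __init__(self, blocks):
        self.blocks = list(blocks)
        self.diff_area = {}          # block index -> content at snapshot time
        self.snapshot_active = False
        self.reads = 0
        self.writes = 0

    def take_snapshot(self):
        self.diff_area = {}
        self.snapshot_active = True

    def delete_snapshot(self):
        self.diff_area = {}
        self.snapshot_active = False

    def write(self, index, data):
        if self.snapshot_active and index not in self.diff_area:
            old = self.blocks[index]      # extra read of the old content
            self.reads += 1
            self.diff_area[index] = old   # extra write to the new location
            self.writes += 1
        self.blocks[index] = data         # the write the caller asked for
        self.writes += 1

    def read_snapshot(self, index):
        """Return the block as it was when the snapshot was taken."""
        return self.diff_area.get(index, self.blocks[index])


volume = SnapshottedVolume(["old"] * 16)
volume.take_snapshot()
volume.write(10, "new")
print(volume.reads, volume.writes, volume.read_snapshot(10), volume.blocks[10])
```

Once the snapshot is deleted, as DPM does after the data transfer completes, writes go back to costing a single IO.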

In the case of CSV, any one node can be the owner of a CSV, meaning that all important operations such as file system metadata changes can be done only by this owner node. All other nodes are non-owner nodes with regard to this CSV. A node can own multiple CSVs, but a CSV can be owned by only one node. Non-owner nodes need to send any file system metadata changes over the network to the owner node, and the owner node commits the changes to disk. All non-metadata data is written directly to the disk by any node. If a VM is being backed up by the DPM agent on a non-owner node, all backup reads go over the network from the non-owner node to the owner node. So, there will be network consumption for each backup data read in this case. For any VM backup, the snapshot and data backup are driven from wherever the VM is actively running. So, to keep the owner/non-owner network traffic low, it is advisable to keep the VM's affinity to the node that owns its storage CSV volume. This will reduce over the wire network traffic.

All of the owner node, non-owner node, and IO redirection traffic is reduced if a hardware VSS provider is deployed on the system.
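The affinity advice above can be checked against an inventory of the cluster. The sketch below flags VMs that run on a node which does not own every CSV holding their VHDs. The function name and the three input dictionaries are hypothetical; how the inventory is gathered from the cluster is outside the scope of this sketch.

```python
def vms_with_redirected_backup_reads(vm_node, vm_csvs, csv_owner):
    """Map each misplaced VM to the CSVs it would read over the network.

    vm_node:   VM name -> node where the VM is running
    vm_csvs:   VM name -> list of CSVs that hold the VM's VHDs
    csv_owner: CSV name -> node that currently owns the CSV
    """
    flagged = {}
    for vm, node in vm_node.items():
        remote = [csv for csv in vm_csvs.get(vm, []) if csv_owner.get(csv) != node]
        if remote:
            flagged[vm] = remote
    return flagged


vm_node = {"vm1": "nodeA", "vm2": "nodeB"}
vm_csvs = {"vm1": ["csv1"], "vm2": ["csv1", "csv2"]}
csv_owner = {"csv1": "nodeA", "csv2": "nodeB"}
print(vms_with_redirected_backup_reads(vm_node, vm_csvs, csv_owner))
```

A VM spread over several CSVs with different owners can never be fully local, which is one more reason to keep the number of CSVs per VM low.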

Parallel Backups

By default, DPM triggers 3 jobs at a time on any node. This per node number can be changed by setting the DWORD registry value “Microsoft Hyper-V” located at “HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft Data Protection Manager\2.0\Configuration\MaxAllowedParallelBackups”. Also, a node is configured by default to run 8 backup jobs at any given time. This means that if a 64 node cluster is protected by 5 DPM servers, each node still honors only 8 parallel job requests in total, whether they come from all or only some of the DPM servers. This value can be changed by following the steps discussed at the end of the blog article located here.
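The interaction of the two defaults can be expressed as a one-line calculation. The sketch below assumes the worst case in which every DPM server sends its maximum number of jobs to the same node at the same moment; the function and constant names are hypothetical.

```python
DEFAULT_JOBS_PER_DPM_PER_NODE = 3  # jobs one DPM server triggers on a node
DEFAULT_NODE_JOB_CAP = 8           # jobs a node honors at any given time


def concurrent_jobs_on_node(dpm_servers,
                            jobs_per_dpm=DEFAULT_JOBS_PER_DPM_PER_NODE,
                            node_cap=DEFAULT_NODE_JOB_CAP):
    """Upper bound on backup jobs running on one node at the same time."""
    return min(dpm_servers * jobs_per_dpm, node_cap)


for servers in (1, 2, 5):
    print(servers, "DPM server(s):", concurrent_jobs_on_node(servers))
```

With the defaults, the node cap becomes the limiting factor as soon as three or more DPM servers protect VMs on the same node.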

The table below describes the maximum number of jobs that DPM schedules at any given moment.

Hyper-V Replication and DPM protection

Windows 2012 introduced Hyper-V replication (HVR) technology, where VMs can be replicated as frequently as every 15 minutes so that they can be failed over to a remote site in case of disaster. As the very reason for DR is to provide an offsite failover in case of emergency, the DR site is at an offsite location. As the backup is expected to be located on the same premises as the primary VMs, VM protection should be configured on the primary VM and not on the replica VM.

When planning to protect VMs on a Hyper-V cluster, all of the above parameters need to be considered in detail. Many of them depend on the VM size, churn per day, retention period, number of CSVs, number of VMs on the CSVs, and so on.