Faster IIS Web Site Provisioning Using Microsoft.Web.Administration

Yesterday I got an email about some performance numbers that one of our customers was running into when remotely creating Web Sites, Applications and Application Pools, and performing other tasks in IIS, using Microsoft.Web.Administration. In case you don't know, Microsoft.Web.Administration is a .NET library that exposes the IIS configuration system.

The code was using Microsoft.Web.Administration from PowerShell to create a single Application Pool, create a new Web Site, and finally assign the Root Application to the new Application Pool, and it was timing each of those tasks. The script was then called repeatedly until 10,000 sites had been created. They then built a very nice Excel spreadsheet with a chart of how long each operation took, which clearly showed how fast (or slow) things were depending on the number of sites already in IIS.

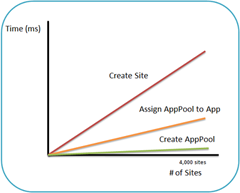

Funnily enough, the code was doing almost exactly what we used to measure provisioning performance in IIS 7.0. We ran into very similar numbers a couple of years ago, but we probably never shared them. The results back in the day showed a trend like the one in the chart: creating the AppPool was quite fast (less than 400ms once you had 10,000 sites), assigning the Application Pool took almost 10 times longer (almost 4 seconds), and creating the Site took almost 20 times longer (up to 8 seconds).

Our test code looked something like this (with all the timing code removed):

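    using (ServerManager m = new ServerManager()) {
        // Create the pool.
        ApplicationPool pool = m.ApplicationPools.Add("MyPool");
        // Create the site; this overload makes IIS generate the site ID.
        Site site = m.Sites.Add("MySite", ... @"d:\inetpub\wwwroot");
        // Look the root application up again to assign it to the pool.
        site.Applications["/"].ApplicationPoolName = "MyPool";
        m.CommitChanges();
    }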

Back in those days (2005) the numbers were even worse: you would literally have to wait minutes to get a site created. We profiled it, understood exactly where the time was going, and made it better.

Here is how to make it faster

- Most importantly, batch as many changes to the configuration system as you can so that you call CommitChanges only once. For example, creating the 10,000 sites and committing only once will probably average 1,000 sites per second. Just as important, you will reduce the load on the server: every time the configuration changes, the server has to process the change, and that costs additional CPU cycles in WAS and W3SVC.

- The reason Application Pool creation looked slower at the beginning when using ServerManager locally was just an artifact of it being the first operation in our test script. Creating a ServerManager does nothing interesting other than activating a COM object that parses the schema; the real XML parsing of the files happens when you first request a section. So the first operation (in this case accessing the AppPool collection) pays the price of parsing machine.config, the root web.config and ApplicationHost.config, and the last of those grows considerably as new sites and pools are added.

- Using ServerManager remotely (OpenRemote) will definitely be several times slower, because every time a property is set or anything else is changed, the code actually hits the network to set the values on the remote objects; the client only holds proxies. Setting appPool.Name, for example, goes over the network to set the value in the dllhost.exe process hosting the DCOM object.

- Finally, and most important for site creation: Site creation was extremely slow because of the way our test code was creating the site and the application. In particular, we were using m.Sites.Add(), which has several overloads, most of which are "friendly but non-performant" combinations. None of them takes a Site ID, which forces us to calculate one automatically every time a Site is added. We have implemented two algorithms for this (selected by a registry key called IncrementalSiteIDCreation), and both need to read all the existing sites first to make sure the generated ID is not already in use. If you have 4,000 sites we have to read all 4,000, and over the network that translates to literally thousands of round trips.
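The batching advice in the first point can be sketched roughly as follows. This is only an illustration, not our test code: the `BatchProvisioning` class, the `CreatePools` helper and its parameters are hypothetical names, it uses application pools purely because they are the simplest thing to create, and it assumes it runs locally with administrative rights on a machine with IIS installed.

```csharp
using System;
using System.Diagnostics;
using Microsoft.Web.Administration;

static class BatchProvisioning
{
    // Hypothetical helper: adds `count` application pools named prefix0,
    // prefix1, ... and writes them all with a single commit.
    // Assumes none of those names exist yet.
    public static TimeSpan CreatePools(string prefix, int count)
    {
        Stopwatch watch = Stopwatch.StartNew();
        using (ServerManager m = new ServerManager())
        {
            for (int i = 0; i < count; i++)
            {
                // Queues the change; nothing is written to the config file yet.
                m.ApplicationPools.Add(prefix + i);
            }
            // One commit for the whole batch, so the server processes a
            // single configuration change instead of `count` of them.
            m.CommitChanges();
        }
        return watch.Elapsed;
    }
}
```

Calling something like `BatchProvisioning.CreatePools("TestPool", 1000)` and comparing the elapsed time against a version that commits inside the loop should make the difference easy to see on your own server.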

So the bottom line for site creation is to use m.Sites.CreateElement() followed by m.Sites.Add(newSite), which does not generate an ID for the Site and therefore does not incur the costs mentioned above. Something like the snippet below. Notice that there is a little more code, since you also need to create the Root Application and set the bindings, but this proves useful: assigning the application pool requires the App anyway, and you save all the lookups on the Sites list as well.

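    using (ServerManager m = new ServerManager()) {
        ApplicationPool pool = m.ApplicationPools.Add("MyPool");
        // Create a detached site element and supply the ID ourselves,
        // so IIS does not have to read every existing site to generate one.
        Site site = m.Sites.CreateElement("site");
        site.Name = "MySite";
        site.Id = "MySite".GetHashCode();
        // Creating the root application here gives us the App directly.
        Application app = site.Applications.Add("/", @"d:\inetp...");
        app.ApplicationPoolName = "MyPool";
        // Add the finished element to the Sites collection.
        m.Sites.Add(site);
        m.CommitChanges();
    }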

The important thing to take away from this story is that there are ways to make provisioning much faster, and you should use them if you are going to create sites in bulk. With the two changes outlined above (specify the Site ID yourself and don't look up the Site again to get the App), the results were incredible: we were back to under 350ms for all the tasks.
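Putting the pieces together for bulk creation, a minimal sketch might look like the following. It is an illustration under stated assumptions rather than the code we measured: `SiteProvisioning`, `NextSiteId` and `CreateSites` are hypothetical names, the "largest existing ID plus one" scheme is just one simple way to pick unique IDs, and the HTTP bindings on consecutive ports are made up for the example. The point is that the existing sites are read once for the whole batch instead of once per new site, and everything is committed once.

```csharp
using System.Collections.Generic;
using Microsoft.Web.Administration;

static class SiteProvisioning
{
    // Returns an ID one larger than the largest ID in use (1 if there are none).
    public static long NextSiteId(IEnumerable<long> existingIds)
    {
        long next = 1;
        foreach (long id in existingIds)
        {
            if (id >= next) next = id + 1;
        }
        return next;
    }

    // Hypothetical helper: creates `count` sites that share one new pool and
    // one content folder, bound to consecutive ports starting at firstPort.
    public static void CreateSites(string namePrefix, int count,
                                   string poolName, string physicalPath, int firstPort)
    {
        using (ServerManager m = new ServerManager())
        {
            m.ApplicationPools.Add(poolName);

            // Read the existing site IDs once, not once per new site.
            List<long> ids = new List<long>();
            foreach (Site existing in m.Sites) ids.Add(existing.Id);
            long nextId = NextSiteId(ids);

            for (int i = 0; i < count; i++)
            {
                Site site = m.Sites.CreateElement();
                site.Id = nextId++;
                site.Name = namePrefix + i;
                // Binding information has the form "IP:port:hostname".
                site.Bindings.Add("*:" + (firstPort + i) + ":", "http");
                Application app = site.Applications.Add("/", physicalPath);
                app.ApplicationPoolName = poolName;
                m.Sites.Add(site);
            }

            // Single commit for the whole batch.
            m.CommitChanges();
        }
    }
}
```

If you run this remotely, creating the ServerManager with ServerManager.OpenRemote instead of the constructor should work the same way, but every property set in the loop then becomes a network round trip, so expect it to be slower than the local case.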