IIS Smooth Streaming Format SDK - Sample Application

The IIS Smooth Streaming Format SDK is a library of code for encoder applications to pack media samples in the IIS Smooth Streaming container format (fragmented MP4 format) and generate the corresponding server and client manifests. The output of the SDK can be either written to files for media-on-demand scenarios or pushed to a live publishing point on the IIS server for immediate distribution.

The SDK’s installation package comes with an example of a basic muxing application. This application uses the SDK to generate the Smooth Streaming format files and manifests from a set of media files encoded in WMA, WMV/VC-1, H.264 or AAC-LC formats. The source code for this application serves as a functional example of how to use the SDK to mux Smooth Streaming content.

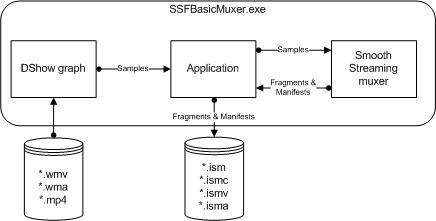

Here’s a very high-level view of the main blocks in the sample application:

The application uses the Microsoft DirectShow (DShow) application programming interface to grab the media types and compressed media samples from the input files. It then feeds those types and samples to the muxer object from the IIS Smooth Streaming Format SDK. Finally, the application takes the resulting fragmented MP4 chunks and manifest data generated by the muxer and writes them to the corresponding files (.ismv, .isma, .ismc or .ism).

Note: DirectShow does not ship with H.264 or AAC-LC filters. To enable these formats in the sample application you will need to install DShow filters for them.

1. Parse the input parameters

The application takes a text file as input. This text file contains a list of the media files and corresponding bitrates to mux, one media file per line. The format of each line is:

```
<bitrate> , <filename>
```

For example, the text file could contain a list like this:

```
904000, Movie_1.wmv
760000, Movie_2.wmv
580000, Movie_3.wmv
77000, Sound_1.wma
```

I will not go into the details of how the input file is parsed, but I should explain why the sample application requires users to enter the bitrates explicitly. In theory the application could get the bitrate property directly from DShow. However, exposing this property is optional for DShow filters, and not all of them do it. To stay generic while keeping the code simple, the application requires the bitrate as an explicit parameter.
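Since the parser itself is not covered here, below is a minimal, hypothetical sketch of how a list in the bitrate-comma-filename format could be read. InputEntry, Trim and ParseStreamList are illustrative names and do not come from the sample. The sketch splits each non-blank line at the first comma and trims surrounding whitespace. It also rejects bitrates that are not plain decimal numbers.

```cpp
#include <cassert>
#include <cstdint>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical record for one line of the input list: "<bitrate>, <filename>".
struct InputEntry {
    std::uint32_t bitrate;   // bits per second, as supplied by the user
    std::string fileName;    // path of a single-stream media file
};

// Removes leading and trailing spaces, tabs and carriage returns.
static std::string Trim(const std::string& s) {
    const char* ws = " \t\r";
    std::size_t b = s.find_first_not_of(ws);
    if (b == std::string::npos) return "";
    std::size_t e = s.find_last_not_of(ws);
    return s.substr(b, e - b + 1);
}

// Parses the whole list; blank lines are skipped. Returns false on a malformed line.
bool ParseStreamList(const std::string& text, std::vector<InputEntry>& out) {
    std::istringstream in(text);
    std::string line;
    while (std::getline(in, line)) {
        line = Trim(line);
        if (line.empty()) continue;                  // tolerate blank separator lines
        std::size_t comma = line.find(',');
        if (comma == std::string::npos) return false;
        std::string rate = Trim(line.substr(0, comma));
        std::string name = Trim(line.substr(comma + 1));
        if (rate.empty() || name.empty()) return false;
        // Digits only, at most 9 of them, so the value always fits in 32 bits.
        if (rate.size() > 9 || rate.find_first_not_of("0123456789") != std::string::npos)
            return false;
        out.push_back({static_cast<std::uint32_t>(std::stoul(rate)), name});
    }
    return true;
}
```

Fed the example list above, this yields four entries, the first being 904000 with Movie_1.wmv.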

2. Setup the media pipeline

The next step is to set up the input pipelines in a DShow graph, one branch for each input file. The pipeline outputs the compressed elementary-stream media samples to the application, which in turn feeds them to the muxer. For each branch of the pipeline the application uses a custom “sample grabber” DShow filter with a simplified callback interface to receive the compressed samples. The default sample grabber filter that ships with the DirectShow API does not handle all the media types the application targets, hence the need for a custom filter. This custom filter comes in the dshowhlp.lib static library that accompanies the sample code. This library is intended for use in this example only and should not be used or redistributed in any other application.

The grabber filter has a single input pin that accepts any media type. Samples received in this pin are relayed to the application’s callback.

The interesting code to look at here is the StreamContext_AddToDShowGraph function, which sets up the DShow branch of the pipeline for each input file. Let’s take a look.

```cpp
//------------------------------------------------------------------------------
HRESULT StreamContext_AddToDShowGraph(
    __inout StreamContext* pCtx,
    __in IGraphBuilder *pIGraph )
{
    HRESULT hr = S_OK;
    IBaseFilter* pBaseFilter = NULL;
    IPGI* pGrabber = NULL;
```

First we create a grabber filter for the file and add it to the DShow graph. PGI_CreateInstance is defined in dshowhlp.lib.

```cpp
    hr = PGI_CreateInstance( IID_IPGI, (void**)&pGrabber );
    if( FAILED(hr) )
    {
        printf( "Error 0x%08x: failed to create sample grabber filter\n", hr );
        goto done;
    }

    hr = pGrabber->QueryInterface( IID_IBaseFilter, (PVOID*)&pBaseFilter );
    if( FAILED(hr) )
    {
        goto done;
    }

    hr = pIGraph->AddFilter( pBaseFilter, NULL );
    if( FAILED(hr) )
    {
        printf( "Error 0x%08x: failed to add sample grabber to graph\n", hr );
        goto done;
    }
```

Next we ask the DShow graph to render the input file, which adds that file to the pipeline. DShow instantiates the proper source filter for the file’s type and tries to pull in the filters needed to complete the pipeline for that source. The grabber is already in the graph, so DShow tries it first. Because the grabber accepts all media types, DShow connects the source’s first output pin to the grabber’s only input pin.

Note that this implies the source must have only one output pin. A limitation of this code example is that it requires the input files to be single-stream. Suppose a file has more than one media stream, say an audio and a video stream. DShow will connect the first to the grabber filter and then try to load default filters to connect the remaining streams. This can result in odd behavior, for instance the tool playing a stray video stream on the screen.

Supporting multiple streams per file would require constructing the pipeline programmatically: instantiating the source filter, enumerating its output pins, and connecting each one to its own grabber filter. That would make the code more complex than this example warrants.

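```cpp
    hr = pIGraph->RenderFile( pCtx->szInputFileName, NULL );
    if( FAILED(hr) )
    {
        printf( "Error 0x%08x: failed trying to open file in DShow graph\n", hr );
        goto done;
    }

    if( FALSE == IsConnected( pBaseFilter ) )
    {
        // hr still holds a success code at this point, so set a failure code
        // explicitly; otherwise the function would report success to the caller.
        hr = E_FAIL;
        printf( "Error 0x%08x: failed to connect sample grabber\n", hr );
        goto done;
    }
```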

Once the new branch of the pipeline is connected, we register the application’s callback in the grabber filter. That’s the callback the grabber will call to deliver the compressed samples.

```cpp
    hr = pGrabber->set_Callback2( HandleSampleHook, pCtx );
    if( FAILED(hr) )
    {
        printf( "Error 0x%08x: failed to set the IPGI callback\n", hr );
        goto done;
    }
```

Finally we extract the media type of the new branch, which we’ll need later to set up the SDK’s muxer. I won’t go into detail on how to get that media type; the code should be self-explanatory.

```cpp
    hr = StreamContext_SetTypeInfoFromRenderer(
        pCtx,
        pBaseFilter );
    if( FAILED(hr) )
    {
        goto done;
    }

done:
    SAFE_RELEASE( pBaseFilter );
    SAFE_RELEASE( pGrabber );
    return ( hr );
}
//------------------------------------------------------------------------------
```

3. Create the SSF muxer and set it up

Once the input pipeline is set up, the next step is to instantiate the muxer object from the IIS Smooth Streaming Format SDK. Let’s look at the code.

First we use SSFMuxCreate to create the muxer.

```cpp
hr = SSFMuxCreate( &hSSFMux );
if( FAILED(hr) )
{
    printf( "Error 0x%08x: Failed to create SSF muxer\n", hr );
    goto done;
}
```

Then we configure the basic options.

```cpp
UINT64 timeScale = 10000000; // time scale in HNS (100-ns units): 10,000,000 ticks per second
hr = SSFMuxSetOption( hSSFMux, SSF_MUX_OPTION_TIME_SCALE, &timeScale, sizeof(timeScale) );
if( FAILED(hr) )
{
    printf( "Error 0x%08x: Failed to set SSF_MUX_OPTION_TIME_SCALE\n", hr );
    goto done;
}

BOOL fVariableBitRate = TRUE;
hr = SSFMuxSetOption( hSSFMux, SSF_MUX_OPTION_VARIABLE_RATE, &fVariableBitRate, sizeof(fVariableBitRate) );
if( FAILED(hr) )
{
    printf( "Error 0x%08x: Failed to set SSF_MUX_OPTION_VARIABLE_RATE\n", hr );
    goto done;
}
```

Finally we add one stream to the muxer for each input file. All the streams we intend to mux must be added up front.

```cpp
//
// Add streams to the muxer
//
for( ULONG i = 0; i < cStreams; ++i )
{
    hr = AddStreamToMux(
        &rgStreams[i],
        hSSFMux,
        ( AUDIO == rgStreams[i].eStreamType ) ? cAudioStreams++ : cVideoStreams++ );
    if( FAILED(hr) )
    {
        printf( "Error 0x%08x: Failed to add stream to mux\n", hr );
        goto done;
    }

    rgStreamIndices[i] = rgStreams[i].dwStreamIndex;
}
```

Let’s take a look in AddStreamToMux to see what it does:

```cpp
//------------------------------------------------------------------------------
HRESULT AddStreamToMux(
    __inout StreamContext* pCtx,
    __in SSFMUXHANDLE hSSFMux,
    __in ULONG iStream )
{
    HRESULT hr = S_OK;
    SSF_STREAM_INFO streamInfo;
    memset( &streamInfo, 0, sizeof(streamInfo) );

    pCtx->hSSFMux = hSSFMux;
```

Here we format the archive name for the stream. We use “Audio<n>.isma” for audio streams and “Video<n>.ismv” for video streams; this is the name of the file that will contain the media stream. The current version of the SDK allows only one stream per file. The SDK does not write the fragmented MP4 data to the files directly. However, the data may contain offsets that impose assumptions on how it is persisted to files, hence the limitation. The next version of the SDK (Beta 2) is expected to provide an API to synchronize offsets. That will give the application more freedom to merge multiple streams into one file.

```cpp
    if( 0 > swprintf_s(
            pCtx->szArchiveName,
            MAX_PATH,
            // %lu matches the ULONG type of iStream
            ( pCtx->eStreamType == AUDIO ) ? L"Audio%lu.isma" : L"Video%lu.ismv",
            iStream ) )
    {
        hr = E_UNEXPECTED;
        goto done;
    }
```

Next we choose how long each fragment will be. For simplicity we’ll fix the value at 5 seconds for audio streams and 2 seconds for video streams. We select this value here for convenience, but note that it’s not used directly by the SDK’s muxer. Instead, we’ll use it later in the callback function to decide where to split the fragments.

```cpp
    if( pCtx->eStreamType == AUDIO )
    {
        pCtx->rtChunkDuration = 50000000; // 5 seconds in hns units
    }
    else
    {
        pCtx->rtChunkDuration = 20000000; // 2 seconds in hns units
    }

    streamInfo.streamType = pCtx->eStreamType;
    streamInfo.dwBitrate = pCtx->bitrate;
    streamInfo.pszStreamName = pCtx->szArchiveName;
    streamInfo.pTypeSpecificInfo = pCtx->pbTypeInfo;
    streamInfo.cbTypeSpecificInfo = pCtx->cbTypeInfo;
    streamInfo.wLanguage = MAKELANGUAGE('e','n','g'); // "eng": ISO 639-2 code for English
```

Finally we add the stream to the muxer with SSFMuxAddStream. If successful, the API returns a stream index that we use to refer to this particular stream from now on.

```cpp
    hr = SSFMuxAddStream( hSSFMux, &streamInfo, &pCtx->dwStreamIndex );
    if( FAILED(hr) )
    {
        goto done;
    }

done:
    return( hr );
}
//------------------------------------------------------------------------------
```
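AddStreamToMux combines a per-type counter from the calling loop with a naming convention and a fixed fragment target. The sketch below reproduces just that bookkeeping in portable C++, so the resulting names and HNS values are easy to check. It is hypothetical: StreamType, ArchiveName, AssignArchiveNames and ChunkDurationHns are illustrative names. It also assumes cAudioStreams and cVideoStreams start at 0, which the excerpt does not show.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// 1 second = 10,000,000 ticks of 100 ns (HNS), matching the muxer's time scale.
constexpr std::int64_t kHnsPerSecond = 10000000;

enum class StreamType { Audio, Video };

// Builds "Audio<n>.isma" or "Video<n>.ismv".
std::string ArchiveName(StreamType type, unsigned index) {
    const bool audio = (type == StreamType::Audio);
    return std::string(audio ? "Audio" : "Video") + std::to_string(index) +
           (audio ? ".isma" : ".ismv");
}

// Mirrors the loop's "cAudioStreams++ : cVideoStreams++": each type is numbered separately.
std::vector<std::string> AssignArchiveNames(const std::vector<StreamType>& types) {
    unsigned audio = 0, video = 0;   // assumed starting values
    std::vector<std::string> names;
    for (StreamType t : types)
        names.push_back(ArchiveName(t, t == StreamType::Audio ? audio++ : video++));
    return names;
}

// Target fragment length used by the sample: 5 s for audio, 2 s for video.
std::int64_t ChunkDurationHns(StreamType type) {
    return (type == StreamType::Audio ? 5 : 2) * kHnsPerSecond;
}
```

Under these assumptions, three video inputs and one audio input produce Video0.ismv, Video1.ismv, Video2.ismv and Audio0.isma, whatever order they appear in.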

4. Get the Smooth Streaming headers

The next step is to get the headers for each stream and write them to the corresponding .ismv or .isma file. Note that SSFMuxGetHeader takes a list of stream indices. This is a provision to allow applications to merge multiple streams into a single .ismv or .isma file. However, due to the limitations mentioned before, that’s not fully supported in the Beta 1 version of the SDK.

Note that the sample application will re-write the headers later when it finalizes the files. This needs to be done because the contents of the headers change as the muxer processes media samples. In fact, the only reason why the sample application requests the headers at this early point is to reserve room for the final headers in the files. The contents of the headers change, but their total lengths do not. Later the application can just re-write the final headers without the need to shift the contents of the files.

```cpp
for( ULONG i = 0; i < cStreams; ++i )
{
    hr = SSFMuxGetHeader( hSSFMux, &rgStreams[i].dwStreamIndex, 1, &outputBuffer );
    if( FAILED(hr) )
    {
        printf( "Error 0x%08x: Failed to get FMP4 header\n", hr );
        goto done;
    }

    hr = StreamContext_WriteDataToFile(
        &rgStreams[i],
        L"wb",
        outputBuffer.pbBuffer,
        outputBuffer.cbBuffer );
    if( FAILED(hr) )
    {
        printf( "Error 0x%08x: Failed to write header to FMP4 file\n", hr );
        goto done;
    }
}
```
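StreamContext_WriteDataToFile is not listed in this walkthrough. Judging from its calls (L"wb" for the header here, L"ab" for fragments later), it appears to open the stream's file with an fopen-style mode string. Below is a minimal, hypothetical stand-in. It assumes narrow-character paths and standard C stdio, not whatever wide-character functions the sample really uses. "wb" creates or truncates the file, which is what makes the early header the first bytes on disk. "ab" always appends.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>

// Hypothetical stand-in for StreamContext_WriteDataToFile.
// mode "wb": create/truncate (header); mode "ab": append (fragments).
bool WriteDataToFile(const char* path, const char* mode,
                     const unsigned char* data, std::size_t size) {
    std::FILE* f = std::fopen(path, mode);
    if (f == nullptr) return false;
    const bool ok = std::fwrite(data, 1, size, f) == size;
    // fclose runs unconditionally; a failed flush on close is also reported.
    return std::fclose(f) == 0 && ok;
}
```

Writing a 4-byte placeholder with "wb" and then a 2-byte fragment with "ab" leaves a 6-byte file, with the placeholder first.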

5. Mux the Media Streams

It’s time to run the DShow graph and feed the incoming compressed samples into the muxer. That’s controlled by the Play function, a regular DShow run loop that starts the graph to play back the input streams through the grabber filters and then dispatches events from the graph until the playback ends.

The important code to look at here is the StreamContext_HandleSample function. This is the function that receives the samples and feeds them into the muxer. First let’s take a look at HandleSampleHook, the callback that the application hooks up to each grabber filter. This function maps the callback application data back to the StreamContext structure that binds an input stream in the graph to an output stream in the muxer.

```cpp
//------------------------------------------------------------------------------
HRESULT WINAPI HandleSampleHook( __in void* pCtx, __in_opt IMediaSample* pSample )
{
    StreamContext* pStreamCtx = (StreamContext*)pCtx;
    return( StreamContext_HandleSample( pStreamCtx, pSample ) );
}
//------------------------------------------------------------------------------
```
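The context round trip in HandleSampleHook is the usual C callback pattern. The caller registers a function pointer together with an opaque void*, and the callback casts it back. Below is a minimal, SDK-free sketch of that pattern. ToyGrabber, Sample and the reduced StreamContext are hypothetical stand-ins, since the real IPGI interface in dshowhlp.lib is not documented here.

```cpp
#include <cassert>

// Hypothetical types that model the pattern only.
struct Sample { long long startTime; bool isSyncPoint; };
struct StreamContext { unsigned streamIndex; int samplesSeen; };

using SampleCallback = int (*)(void* ctx, const Sample* sample);

// A toy "grabber": stores an opaque context and hands it back with every sample.
struct ToyGrabber {
    SampleCallback callback = nullptr;
    void* context = nullptr;
    void SetCallback(SampleCallback cb, void* ctx) { callback = cb; context = ctx; }
    int Deliver(const Sample* s) { return callback ? callback(context, s) : -1; }
};

// Typed per-stream handler, playing the role of StreamContext_HandleSample.
int HandleSample(StreamContext* ctx, const Sample* sample) {
    if (sample != nullptr) ++ctx->samplesSeen;   // null would mean end of stream
    return 0;
}

// Recovers the typed context from void*, as HandleSampleHook does.
int HandleSampleHook(void* ctx, const Sample* sample) {
    return HandleSample(static_cast<StreamContext*>(ctx), sample);
}
```

Each grabber carries its own context pointer, so one hook function can serve every branch of the graph while keeping per-stream state separate.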

Back to StreamContext_HandleSample: it feeds samples into the muxer by calling SSFMuxProcessInput. If a fragment is complete, the function calls SSFMuxProcessOutput to close that fragment and extract its data. It is up to the application to decide when to close a fragment. This does not mean the application can close a fragment at any sample. The fragmented MP4 format requires fragments to contain full GOPs (Groups of Pictures), yet the SDK does not attempt to detect these boundaries in order to enforce them. That is the application’s responsibility.

StreamContext_HandleSample uses the IsSyncPoint method of the incoming sample’s IMediaSample interface to establish GOP boundaries. Note, however, that all IsSyncPoint really does is tell whether the sample is an I-frame. Not every I-frame has to be a GOP boundary unless the content was encoded that way. As a result, the sample application only works with input files in which every I-frame starts its own closed GOP, with no additional I-frames inside the GOP. Some H.264 content contains an IDR frame at the beginning of each CVS (coded video sequence) but also contains I-frames within the CVS before the next IDR frame. The sample application may generate invalid .ismv files for input files encoded with multiple I-frames per GOP. This is a limitation of this particular example. The SDK handles the scenario correctly as long as the application makes sure no GOP gets split across multiple fragments.

```cpp
//------------------------------------------------------------------------------
HRESULT StreamContext_HandleSample(
    __in StreamContext* pCtx,
    __in_opt IMediaSample* pSample )
{
    HRESULT hr = S_OK;
    BOOL fProcessOutput = FALSE;
    REFERENCE_TIME rtStartTime = 0;
    REFERENCE_TIME rtStopTime = 0;

    //
    // Verify the conditions to pull a fragment for this stream
    //
    if( NULL == pSample )
    {
        //
        // A null sample means the stream has ended.
        // Pull the last fragment then.
        //
        fProcessOutput = TRUE;
    }
    else
    {
        //
        // Pull a fragment if the sample is a sync point
        // and the fragment duration has been reached
        //
        hr = pSample->GetTime( &rtStartTime, &rtStopTime );
        if( FAILED(hr) )
        {
            goto done;
        }

        pCtx->rtChunkCurrentTime = rtStartTime;
```

Apart from looking for sync-point samples, the function also uses the fragment’s accumulated duration to decide when to close the fragment. A fragment is closed right before the first sync-point sample whose start time is at least the target duration past the start of the fragment. In this example the target is 5 seconds for audio streams and 2 seconds for video streams. The sample that triggers the split is carried over to the next fragment.

IMPORTANT: Before closing the current fragment, we must call SSFMuxAdjustDuration with the current sample’s start time. This updates the duration of the fragment that is about to be closed. The step is necessary because certain media types do not provide accurate durations for their media samples. For those, finding a sample’s correct duration requires the start time of the sample that comes next. In such cases the muxer cannot compute the fragment’s total duration solely from the samples in that fragment. It also needs the start time of the sample that immediately follows the last one in the fragment.

The SSFMuxAdjustDuration API needs to be called only on fragment boundaries.

Of course, the other condition that causes a fragment to be closed is the arrival of end-of-stream, which is indicated by a null pSample pointer.

```cpp
        if( ( S_OK == pSample->IsSyncPoint() )
            && ( pCtx->rtChunkCurrentTime - pCtx->rtChunkStartTime >= pCtx->rtChunkDuration ) )
        {
            fProcessOutput = TRUE;

            hr = SSFMuxAdjustDuration( pCtx->hSSFMux, pCtx->dwStreamIndex, rtStartTime );
            if( FAILED(hr) )
            {
                goto done;
            }
        }
    }
```

Calling SSFMuxProcessOutput closes the current fragment and returns a buffer with the data to be appended to the corresponding .ismv or .isma file. The content of the buffer is valid only until the next call to SSFMuxProcessOutput for the same muxer. Applications should therefore not hold on to this buffer longer than necessary, and must copy the data to a separate buffer if they need it later.

```cpp
    //
    // Pull a fragment if the conditions require
    //
    if( fProcessOutput )
    {
        SSF_BUFFER outputBuffer;

        hr = SSFMuxProcessOutput( pCtx->hSSFMux, pCtx->dwStreamIndex, &outputBuffer );
        if( FAILED(hr) )
        {
            goto done;
        }

        printf( "Stream #%d TS=%I64d Duration=%I64d\n",
            pCtx->dwStreamIndex,
            outputBuffer.qwTime,
            outputBuffer.qwDuration );

        ++pCtx->dwChunkIndex;

        hr = StreamContext_WriteDataToFile( pCtx, L"ab", outputBuffer.pbBuffer, outputBuffer.cbBuffer );
        if( FAILED(hr) )
        {
            goto done;
        }

        pCtx->fChunkInProgress = FALSE;
    }
```

The next step is to feed the sample into the muxer, which is done through the SSFMuxProcessInput API. If the previous portion of the code has just closed a fragment, or if this is the very first sample for this stream, the API starts a new fragment for that stream.

```cpp
    //
    // Process the input sample if any
    //
    if( NULL != pSample )
    {
        SSF_SAMPLE inputSample = { 0 };
        BYTE* pbSampleData = NULL;

        hr = pSample->GetPointer( &pbSampleData );
        if( FAILED(hr) )
        {
            goto done;
        }

        inputSample.qwSampleStartTime = (UINT64)rtStartTime;
        inputSample.pSampleData = pbSampleData;
        inputSample.cbSampleData = pSample->GetActualDataLength();
        inputSample.flags = SSF_SAMPLE_FLAG_START_TIME;

        if( pCtx->fSampleDurationIsReliable )
        {
            inputSample.qwSampleDuration = rtStopTime - rtStartTime;
            inputSample.flags |= SSF_SAMPLE_FLAG_DURATION;
        }

        if( !pCtx->fChunkInProgress )
        {
            pCtx->rtChunkStartTime = rtStartTime;
            pCtx->fChunkInProgress = TRUE;
        }

        hr = SSFMuxProcessInput( pCtx->hSSFMux, pCtx->dwStreamIndex, &inputSample );
        if( FAILED(hr) )
        {
            goto done;
        }
    }

done:
    return ( hr );
}
//------------------------------------------------------------------------------
```
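The fragmenting rule in StreamContext_HandleSample can be modeled without DirectShow or the SDK. Below is a minimal, hypothetical simulation; ToySample, Fragment and SplitIntoFragments are illustrative names, not part of the sample or the SDK. It closes a fragment right before a sync point once the target duration has been reached. That fragment ends at the triggering sample's start time, which is the value the sample passes to SSFMuxAdjustDuration. At end of stream it flushes the last fragment. Unlike the sample, it assumes every sample has a known stop time, so the last fragment's duration can be computed directly.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical, SDK-free model of the splitting policy (times in HNS).
struct ToySample { std::int64_t start; std::int64_t stop; bool sync; };
struct Fragment  { std::int64_t start; std::int64_t duration; std::size_t sampleCount; };

std::vector<Fragment> SplitIntoFragments(const std::vector<ToySample>& samples,
                                         std::int64_t chunkDuration) {
    std::vector<Fragment> out;
    bool inProgress = false;
    Fragment cur{0, 0, 0};
    for (const ToySample& s : samples) {
        // Close the open fragment right before a sync point once the target
        // duration is reached; it ends exactly where this sample starts.
        if (inProgress && s.sync && s.start - cur.start >= chunkDuration) {
            cur.duration = s.start - cur.start;
            out.push_back(cur);
            inProgress = false;
        }
        // The triggering sample (or the very first one) opens a new fragment.
        if (!inProgress) { cur = Fragment{s.start, 0, 0}; inProgress = true; }
        ++cur.sampleCount;
    }
    // End of stream (the null sample in the original): flush the last fragment.
    if (inProgress) {
        cur.duration = samples.back().stop - cur.start;
        out.push_back(cur);
    }
    return out;
}
```

Take samples one second apart with sync points at 0, 3 and 5 seconds, and a 2-second target. The result is fragments of 3, 2 and 2 seconds. The first fragment overruns its target because no sync point falls at 2 seconds, which is exactly how the rule keeps GOPs intact.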

It is not necessary to send the end-of-stream signal back to the main loop in the Play function. The DShow graph does that automatically once all input streams reach the end.

6. Finalize the .ismv and .isma files

To finalize a .ismv or .isma file the application needs to update the header and append the index that corresponds to that file.

Early on, the application wrote a placeholder header to each file to reserve room for the final header. During muxing only the contents of the header changed, not its total length. So all the application has to do now is call SSFMuxGetHeader again for each individual stream and rewrite the data at the beginning of that stream’s file. The same goes for the index. The only difference is that the application uses SSFMuxGetIndex to get it and appends the data to the end of the file. The index contains indexing information for all the fragments in that file, plus the closing MP4 atoms that mark the end of the file.

Note that SSFMuxGetIndex is similar to SSFMuxGetHeader in that both can be used to obtain an index (or header) that combines multiple streams in a single file. As mentioned before, however, the Beta 1 version of the SDK does not fully support that scenario because of the offset limitation described earlier.
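The finalization step can be sketched with standard C stdio. This is a minimal illustration, not the sample's code. FinalizeFile is a hypothetical helper, and the header and index bytes stand in for what SSFMuxGetHeader and SSFMuxGetIndex would return. The size check encodes the assumption stated above, that the final header is exactly as long as the placeholder. Opening the file in "r+b" mode lets the helper overwrite the first bytes without truncating the fragments that follow.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <vector>

// Hypothetical helper: overwrite the placeholder header in place, then append the index.
bool FinalizeFile(const char* path,
                  const std::vector<unsigned char>& finalHeader,
                  std::size_t placeholderSize,
                  const std::vector<unsigned char>& index) {
    // A header of a different length would shift or clobber the fragment data.
    if (finalHeader.size() != placeholderSize) return false;
    std::FILE* f = std::fopen(path, "r+b");   // update mode: no truncation
    if (f == nullptr) return false;
    const bool ok =
        std::fseek(f, 0, SEEK_SET) == 0 &&
        std::fwrite(finalHeader.data(), 1, finalHeader.size(), f) == finalHeader.size() &&
        std::fseek(f, 0, SEEK_END) == 0 &&
        std::fwrite(index.data(), 1, index.size(), f) == index.size();
    return std::fclose(f) == 0 && ok;
}
```

For a file holding a 4-byte placeholder followed by fragment data, a 4-byte final header replaces the placeholder and the index lands after the last fragment. A header of any other size is rejected and the file is left untouched.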

7. Generate the Manifests

The last step in the sample application is to emit the server and client manifests. To get those pieces of data the application calls SSFMuxGetServerManifest and SSFMuxGetClientManifest, and then it writes the data to the corresponding manifest files.

Note that although the SDK has a Unicode API set, SSFMuxGetServerManifest and SSFMuxGetClientManifest return the manifest text in ASCII format. This lets the application write the data verbatim to the manifest files without first converting it to UTF-8 or UTF-16.

Another important thing to notice is that SSFMuxGetServerManifest takes a list of streams, just as SSFMuxGetHeader and SSFMuxGetIndex do. In this case, however, the application does pass in the entire set of streams, because the server manifest is supposed to describe all of them.

8. Conclusion

Despite being a simple tool, the basic muxer sample application is a good example of the workflow that encoder applications need to follow when using the IIS Smooth Streaming Format SDK to generate on-demand Smooth Streaming files.