H.265/HEVC Ratification and 4K Video Streaming

OK, so maybe it was a shorter break from blogging than I expected. As it turns out, the world does not stop when I change jobs. ;)

The media world today is abuzz with news of H.265/HEVC approval by the ITU. In case you’ve been hiding from NAB/IBC/SM events for the past two years – or if you’re a WebM hermit – I will have you know that H.265 is the successor standard to H.264, aka MPEG-4 AVC. As was the case with its predecessor, it is the product of years of collaboration between the ISO/IEC Moving Picture Experts Group (MPEG) and the International Telecommunication Union (ITU) Video Coding Experts Group (VCEG). The new video coding standard is important because it promises bandwidth savings of about 40-45% for the same quality as H.264. In a world where video is increasingly being delivered over-the-top and bandwidth is not free, that kind of savings is a big deal.

What most media reports seem to have focused on is the potential effect that H.265 will have on bringing us closer to 4K video resolution in OTT delivery. Most reports speculate that H.265 will allow 4K video to be delivered over the Internet at bit rates between 20 and 30 Mbps. In comparison, my friend Bob Cowherd recently theorized on his blog that 4K OTT delivery using the current H.264 video standard would require about 45 Mbps.

While I think the relative difference between those two estimates is in the ballpark of the 40% bandwidth savings that H.265 promises, I actually think that both estimates are somewhat pessimistic. Given the current state of video streaming technology, I think we’ll actually be able to deliver 4K video at lower bit rates when the time comes for 4K streaming.

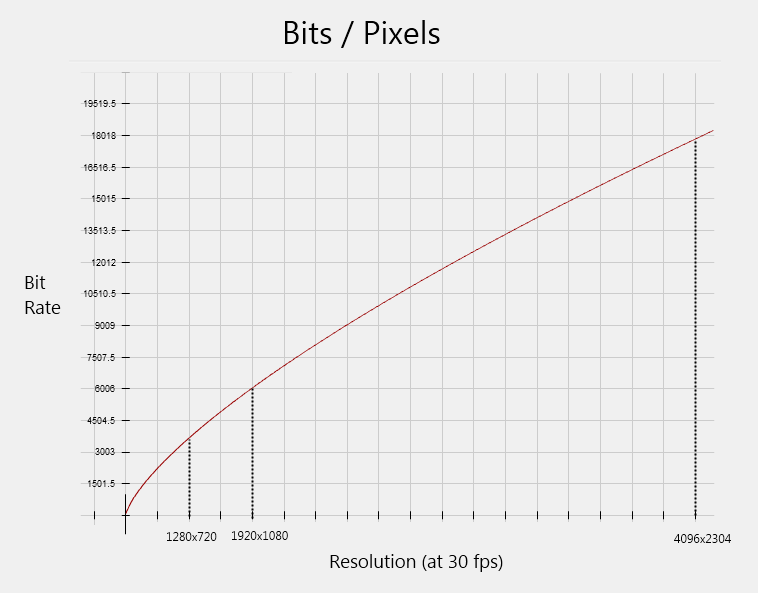

A common mistake that many people dealing with lossy video compression seem to make is to assume that the ratio between bit rate (bps) and picture size (pixels/sec) remains proportional and fixed as the values on both axes change. Based on my dozen+ years of experience working with digital video, I don’t think that’s the case. I believe that the relationship between bit rate and picture size is not linear, but closer to a power function that looks like this:

In other words, I believe that as the pixel count gets higher a DCT-based video codec requires fewer bits to maintain the same level of visual quality. Here’s why:

- The 16×16 macroblock, which is the basic coding unit of contemporary DCT-based codecs such as H.264 and VC-1, grows smaller relative to the total size of the video image as the image resolution grows higher. For example, in a 320×180 video the 16×16 macroblock represents 0.444% of the total image size, whereas in a 1920×1080 video the 16×16 macroblock represents only 0.0123% of the total image. A badly compressed macroblock in a 320×180 frame would therefore be more objectionable than a badly compressed macroblock in a 1920×1080 frame.

- As many studies have shown, the law of diminishing returns applies to video/image resolution too. If you sit at a fixed distance from your video display device, eventually you will no longer be able to distinguish the difference between 720p, 1080p and 4K resolutions due to your eye’s inability to resolve tiny pixels from a certain distance. By the same token, as the video resolution goes up your eyes become less likely to distinguish compression artifacts too – which means the video compression can afford to get sloppier.

- Historically, the bit rates used for OTT video delivery and streaming have been much lower than those used in broadcasting, consumer electronics and physical media. For example, digital broadcast HDTV typically averages ~19 Mbps for video (in CBR mode), while most Blu-ray 1080p videos average ~15-20 Mbps (in 2-pass VBR mode). Those kinds of bit rates are possible because those delivery channels have the luxury of either dedicated bandwidth or high-capacity physical media. However, in the OTT and streaming world video bit rate has always been shortchanged in comparison. Most 720p30 video streaming today, whether live or on-demand, is encoded at an average of 2.5-3.5 Mbps (depending on complexity and frame rate). 1080p30 video, when available, is usually streamed at 5-6 Mbps. Whereas Blu-ray tries to give us movies at a quality level approaching visual transparency, streaming/OTT is completely driven by the economics of bandwidth and consequently only gives us video at the minimum bit rate required to make the video look generally acceptable (and worthy of its HD moniker). To put it bluntly, streaming video is not yet a videophile’s medium.
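The arithmetic behind the first and third points above can be checked with a few lines of Python. This is only a sketch: the function names are made up for illustration, and the specific bit rates picked from within the quoted ranges (3 Mbps for 720p30, 6 Mbps for 1080p30) are assumptions.

```python
def macroblock_share(width, height, block=16):
    """Percentage of the frame area covered by one block x block macroblock."""
    return 100.0 * (block * block) / (width * height)


def bits_per_pixel(bitrate_bps, width, height, fps):
    """Average number of bits spent on each pixel of each frame."""
    return bitrate_bps / (width * height * fps)


if __name__ == "__main__":
    # Share of the picture taken up by a single 16x16 macroblock.
    for w, h in [(320, 180), (1920, 1080)]:
        print(f"{w}x{h}: {macroblock_share(w, h):.4f}% of the frame")

    # Typical streaming bit rates (assumed points within the quoted ranges).
    print(f"720p30 @ 3 Mbps:  {bits_per_pixel(3_000_000, 1280, 720, 30):.3f} bits/pixel")
    print(f"1080p30 @ 6 Mbps: {bits_per_pixel(6_000_000, 1920, 1080, 30):.3f} bits/pixel")
```

Under those assumptions, the 1080p stream already gets by on slightly fewer bits per pixel than the 720p stream, which is the direction the non-linear argument predicts.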

So taking those factors into consideration, what kind of bandwidth should we expect for 4K video OTT delivery? If 1080p video is currently being widely streamed online using H.264 compression at 6 Mbps, then 4K (4096×2304) video could probably be delivered at bit rates around 18-20 Mbps using the same codec at similar quality levels. Again, remember, we’re not comparing Blu-ray quality levels here – we’re comparing 2013 OTT quality levels which are “good enough” but not ideal. If we switch from H.264 to H.265 compression we could probably expect OTT delivery of 4K video at bit rates closer to 12-15 Mbps (assuming H.265’s 40% efficiency improvements do indeed come true). I should note that those estimates are only applicable to 24-30 fps video. If the dream of 4K OTT video also carries an implication of high frame rates – e.g. 48 to 120 fps – then the bandwidth requirements would certainly go up accordingly. But if the goal is simply to stream a 4K version of “The Hobbit” into your home at 24 fps, that dream might be closer to reality than you think.
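To make the estimate above concrete, here is a rough Python sketch that works backwards from the figures in the previous paragraph (6 Mbps at 1080p, 18-20 Mbps at 4096×2304) to the power-law exponent they imply. The exponent is derived here, not stated anywhere in this post, and the 0.75 midpoint and all function names are assumptions chosen purely for illustration.

```python
import math

PIXELS_1080P = 1920 * 1080
PIXELS_4K = 4096 * 2304


def implied_exponent(rate_a, pixels_a, rate_b, pixels_b):
    """Exponent e such that rate_b = rate_a * (pixels_b / pixels_a) ** e."""
    return math.log(rate_b / rate_a) / math.log(pixels_b / pixels_a)


def scale_bitrate(rate_a, pixels_a, pixels_b, exponent):
    """Scale a bit rate to a new picture size using a power function."""
    return rate_a * (pixels_b / pixels_a) ** exponent


def apply_savings(rate, savings):
    """Reduce a bit rate by a fractional codec efficiency gain (0.40 = 40%)."""
    return rate * (1.0 - savings)


if __name__ == "__main__":
    # Exponents implied by the 18 and 20 Mbps estimates for H.264 4K.
    for target in (18, 20):
        e = implied_exponent(6, PIXELS_1080P, target, PIXELS_4K)
        print(f"6 -> {target} Mbps implies exponent {e:.2f}")

    # Strictly proportional scaling (exponent 1.0), for comparison.
    print(f"linear: {scale_bitrate(6, PIXELS_1080P, PIXELS_4K, 1.0):.1f} Mbps")

    # Assumed midpoint exponent of 0.75, then a 40% H.265 saving on top.
    h264_4k = scale_bitrate(6, PIXELS_1080P, PIXELS_4K, 0.75)
    print(f"power law (0.75): {h264_4k:.1f} Mbps")
    print(f"with 40% savings: {apply_savings(h264_4k, 0.40):.1f} Mbps")
```

With these inputs the implied exponent falls between roughly 0.72 and 0.80, while strictly proportional scaling would put H.264 4K at about 27 Mbps. Applying a flat 40% saving to 18-20 Mbps gives 10.8-12 Mbps, at or slightly below the low end of the 12-15 Mbps range quoted above.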

One last thing: In his report about H.265 Ryan Lawler writes that “nearly every video publisher has standardized [H.264] after the release of the iPad and several other connected devices. It seems crazy now, but once upon a time, Apple’s adoption of H.264 and insistence on HTML5-based video players was controversial – especially since most video before the iPad was encoded in VP6 to play through Adobe’s proprietary Flash player.” Not so fast, Ryan. While Apple does deserve credit for backing H.264 against alternatives, they were hardly the pioneers of H.264 web streaming. H.264 was already a mandatory part of the HD DVD and Blu-ray specifications when those formats launched in 2006 as symbols of the new HD video movement. Adobe added H.264 support to Flash 9 (“Moviestar”) in December 2007. Microsoft added H.264 support to Silverlight 3 and Windows 7 in July 2009. The Apple iPad did not launch until April 2010, the same month Steve Jobs posted his infamous “Thoughts on Flash” blog post. So while Apple certainly did contribute to H.264’s success, they were hardly the controversial H.264 advocate Ryan makes them out to be. H.264 was already widely accepted at that point and its success was simply a matter of time.